April 18, 2025

OK Fine, I’ll Talk About AI [Part 1]

It might be a futuristic hammer, but it’s still a hammer.

Like many of you, I am sick of hearing about AI.

It’s all alternating LinkedIn posts about how it’s the best thing since air or how it’s evil and going to kill us all. What I would much rather see are practical examples of how others use it. I value thoughtful perspectives from smart people.

I hope my readers think I’m a smart people.

And yes, I do get asked what I think about AI, so fine. Let’s talk about it.

But first, I have to get a little crude.

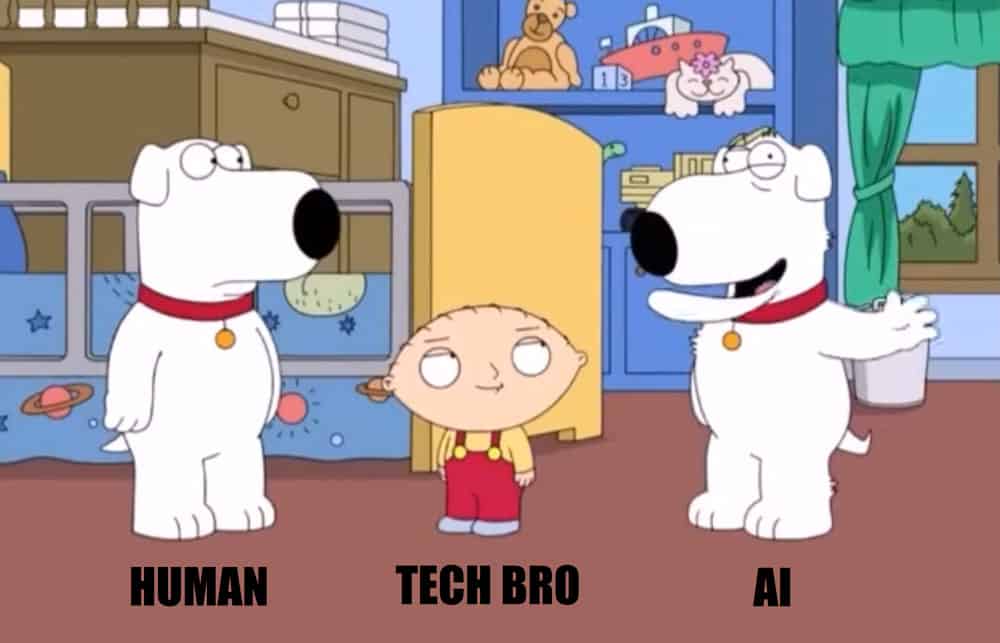

There’s a Family Guy episode where Stewie clones Brian and dials the intelligence WAY back on the clone (“Bitch Brian”).1

Brian: “Did you wash my car like I asked?”

Bitch Brian: “No but I hit it with a rock.”

The plot goes exactly like you’d expect, with badly performed tasks by the clones until they melt down – literally.2

AI is like Bitch Brian.

Building an LLM (Large Language Model) consists of feeding a bunch of information into a machine, and then the machine spits out something new.

When that information consists of every word on the Internet, what it spits out might seem incredibly brilliant (science journals) or incredibly stupid (Reddit). But it is not usually a fully fleshed-out final product. It needs human finesse because it is not “thinking” and it most definitely isn’t art.

It’s like a calculator programmed with words that can output novel scenarios. My personal view on AI is that it is both life-changing and inane. Useful and dangerous. But that’s not helpful, so I want to discuss a few main points.

In the next post I’ll break down exactly how I use AI every day.3

What You Should Know About Artificial Intelligence:

1. AI is not magic.

2. The tool should fit the job.

3. Efficient use of AI requires actual skill.

4. Those skills will become a requirement.

5. AI is dangerous, but not in the way you think.

1. AI is not magic.

AI is not magic.

AI is not magic.

Viewing technology as magic is a major pet peeve of mine.

AI can deliver unexpected results, and the people who built it don’t even understand why it does some of the things it does. But just because you don’t understand something doesn’t mean it’s magic.

It’s just the newest, most disruptive tool in our current phase of human evolution. Like written language. Or the printing press. Radio, TV, the Internet. Now it’s AI.

2. The tool should fit the job.

If AI is a hammer, we’re currently in the “everything is a nail” phase. AI is being mashed into places where it doesn’t belong, doesn’t make sense, or is actively dangerous.

It’s like the sriracha sauce of tech products. It’s like catnip for investors. It’s annoying, and eventually things will even out. I hope.

I’m writing all the time. It’s something I want to do, something I need to do. But I live entirely in my head, so I also have to paint. That’s the creation side in the non-screen world; it’s tangible.

I would never allow either of these things to be done by AI. Writing is how you learn what you think, and painting adds an extra somatic dimension that can only be human.

AI should never be promoted as a substitute for personal writing or physical art.

3. Efficient use of AI requires actual skill.

I think it’s helpful to think of AI as an intern.

You wouldn’t give an intern access to the CEO’s LinkedIn page and instructions to write and post every day with no oversight. Or at least, I hope you wouldn’t.

And yet… every day people are using AI content creation tools to post to social. They might be a good starting point, but rarely is the first pass the final pass. See point #1.

Despite what people say, you cannot just open ChatGPT, type something in, and get exactly what you want.

Sure, that might work for simple questions. Which is where people start, which leads to an (incorrect) assumption that it must be like this for everything.

Nope.

If you asked ChatGPT to plan a travel itinerary for an upcoming vacation and were blown away by the results, you might think all those “I just built an app in 5 minutes” videos are realistic.

Alas, no. Those app videos are prototypes. An interactive design that looks real, with no hosting, no database, none of the components that actually make an app an app. And while some of the tools are starting to integrate those things too (and they will definitely improve), you still have to know how the components work to turn it into something real.

On top of that, there are different LLMs, and different models within each, that specialize in different areas. So it’s not just about knowing how to prompt, but also which model to choose, how to prep, and the most important point, which I’ll cover in the next post.

Here’s a funny example.

Photoshop has generative AI built in. I selected the meme I created above and said:

“Make the room furniture behind the two dogs and boy fade to black with subtle flames.”

Here’s what it gave me.

I mean, I guess it’s cool it generated that out of thin air (i.e. stolen image training data), but it’s not at all what I wanted. Clearly that’s not the right prompt and there is something more to this.

It would take another 30 minutes of testing, selecting layers, and manually editing to get something you could drop on social and say I mAdE tHiS wItH aI!

That’s not to say the tools aren’t useful (and this was the wrong way to go about it). In the next post I’ll show you a successful Photoshop example, because knowing when to use a tool and when NOT to use it are also part of the skillset.

4. AI skills will become a requirement.

Those 30 minutes of testing actually aren’t a bad thing, because AI is going to be the next Microsoft Excel in terms of job skills.

Despite what you might see online, most people still aren’t using AI, which means there’s opportunity for people who are.

In 2025, it is expected that you know how to type, send email, work with Microsoft Office/Google Suite, find your way around the Internet and manage basic computer tasks and security.

AI use is becoming the next high-demand skill and soon a basic requirement for knowledge workers. I think things will chill out when businesses realize they went too far on AI and actually need humans, but the human workers will still need to know how to manage the AI.4

A lack of tech skills in the workforce has long been a liability, and that trend will continue.

5. AI is dangerous, but not in the way you think.

Most people focus on job loss, and while that’s definitely happening, the risks are much more serious.

AI has absorbed all our human biases, regulation is necessary (and not happening), there are major IP and security issues, normal people aren’t actually using it that much yet, it’s massively resource-heavy, it’s overvalued/it’s a bubble, tech bros are insane…

I have a lot more I could say but I’m trying to keep this high level. My next post will be generally positive, but I want to make it clear that there is a cost, and there are a lot of things to think about. It doesn’t feel right to have the “how I use AI” conversation without first introducing this one.

Whew. I barely scratched the surface and this feels like a lot. Now you know why I split this into (at least) two posts.

What’s one question you have about AI?

If you have specific questions or things you want to know about AI, from any angle, leave a comment and I’ll try to bring some clarity to this brave new world.

Part 2: How I Use AI >

Part 3: The Philosophical Baggage of AI >

Recommended Reading/Listening:

- I Will Fucking Piledrive You If You Mention AI Again – Ludic

- We read the paper that forced Timnit Gebru out of Google. Here’s what it says. – MIT Technology Review

- Tristan Harris Is Trying to Save Us from AI – What Now podcast with Trevor Noah

Footnotes:

1 Here’s the relevant Family Guy clone compilation on YouTube. It’s fun to quote with people who know what you’re referencing.

2 That’s right, an EM DASH ( – ). And I typed it myself!

3 I originally had it all in one post, but it was getting way too long.

4 This is also an opportunity to lean into NOT AI. If you’re reading this on my website, you’ll notice the Not by AI badge…